Gestures for the next generation of smartwatches

Background

Our client's innovation team developed two technologies for interacting with smartwatches: a multitouch bezel and a touch-skin sensor that lets users control the device by touching the back of their hand.

Scope

The project's scope was to define relevant use cases and user interfaces that would leverage these technologies to improve the user experience. It was crucial for our client to have both a vision, supported by a strategic framework, and concrete evidence embodied in designs and a working prototype. The project helped our client secure a new budget and move this technology forward.

My role

UX framework definition

UI design

User Testing

Design Lead

Strategy

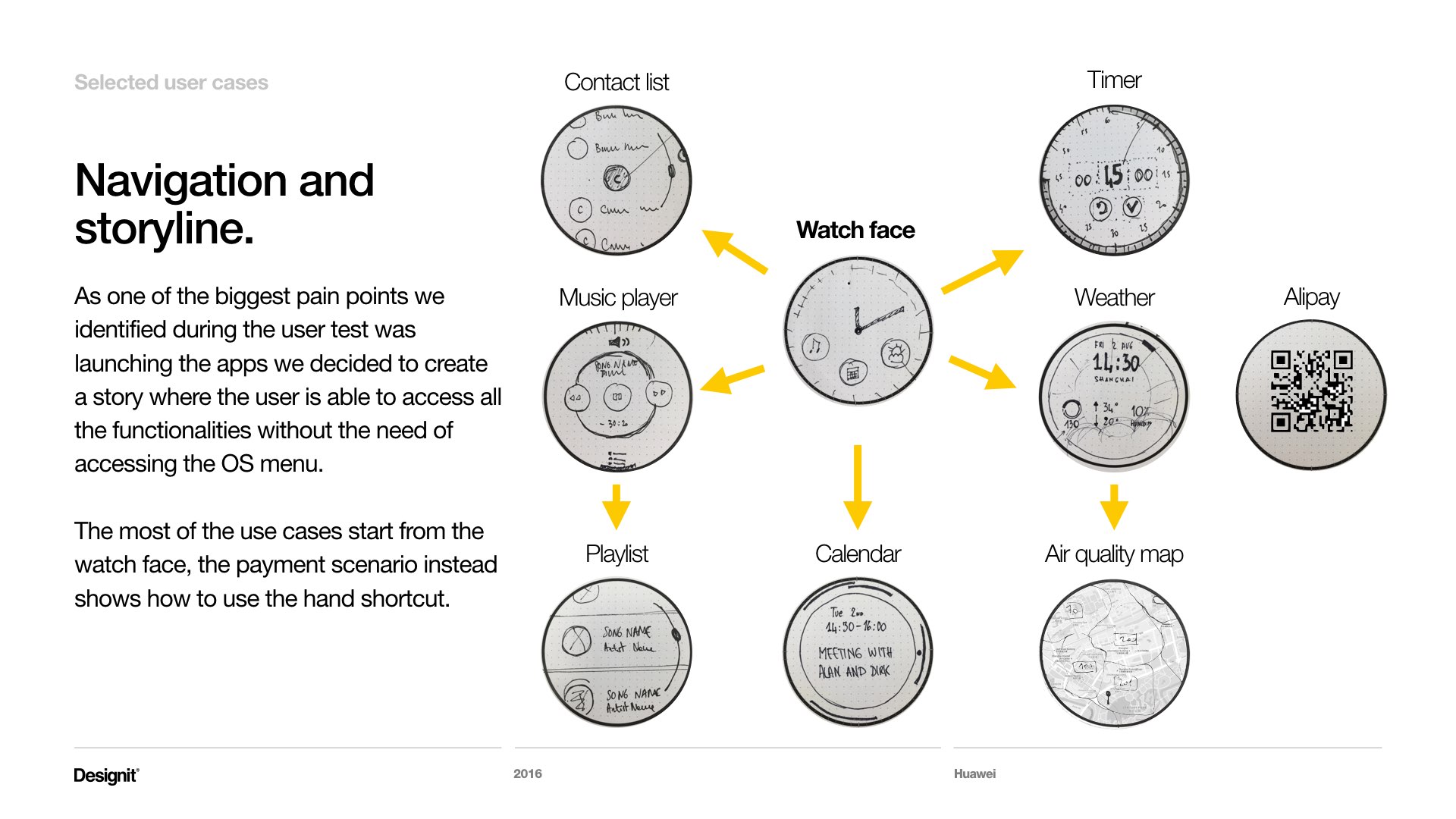

Use cases and storytelling


The Process

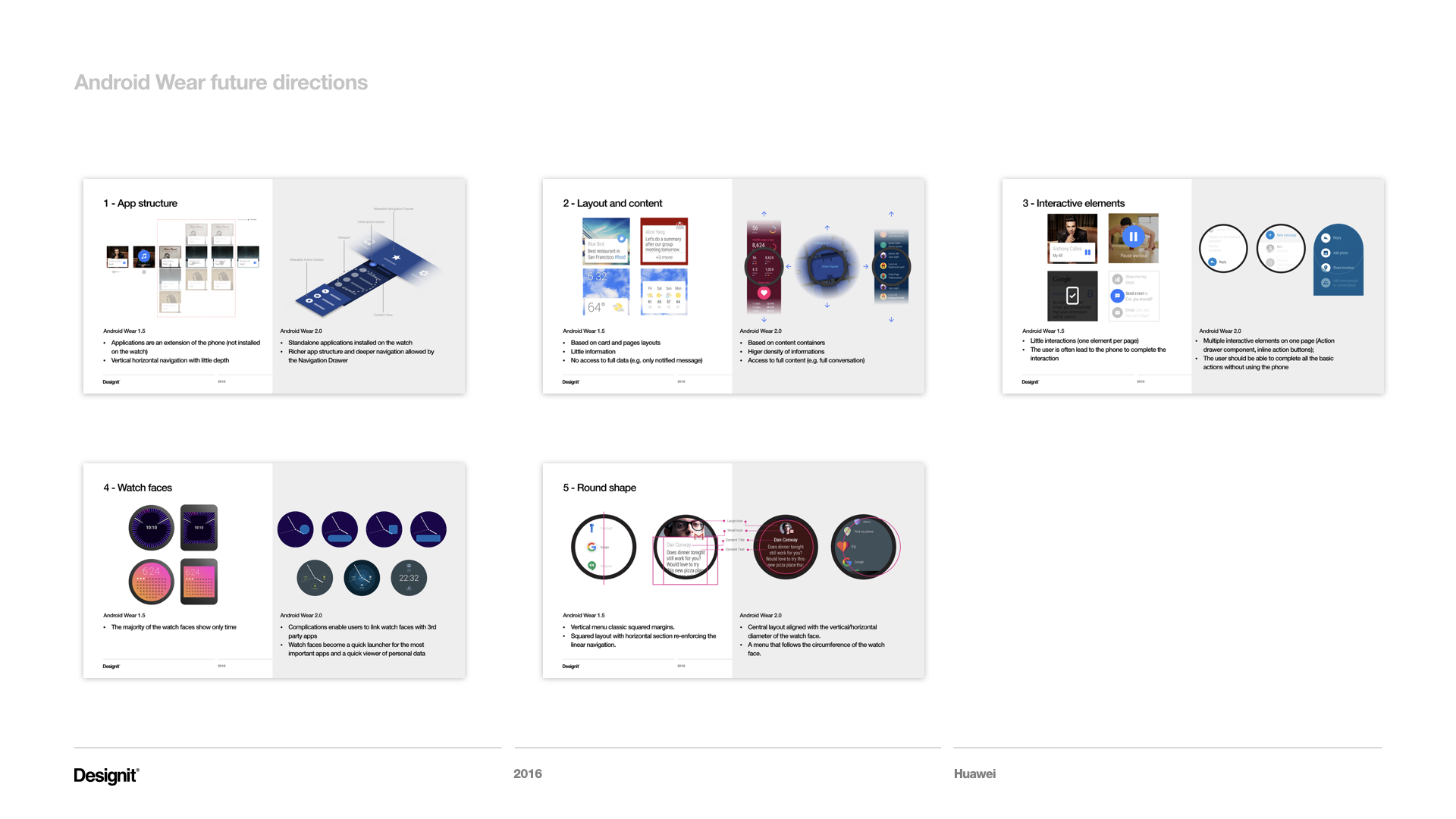

Research

We combined desk research with a 24h survey to explore how people currently use smartwatches, with and without a smartphone. This showed us which applications are used most on smartphones and/or smartwatches, their contexts of use, and the gestures people currently adopt.

Strategy and UX principles

The research insights led me to define the strategic approach, whose output covered competitor analysis, user insights, opportunity spaces and design principles.

Ideation

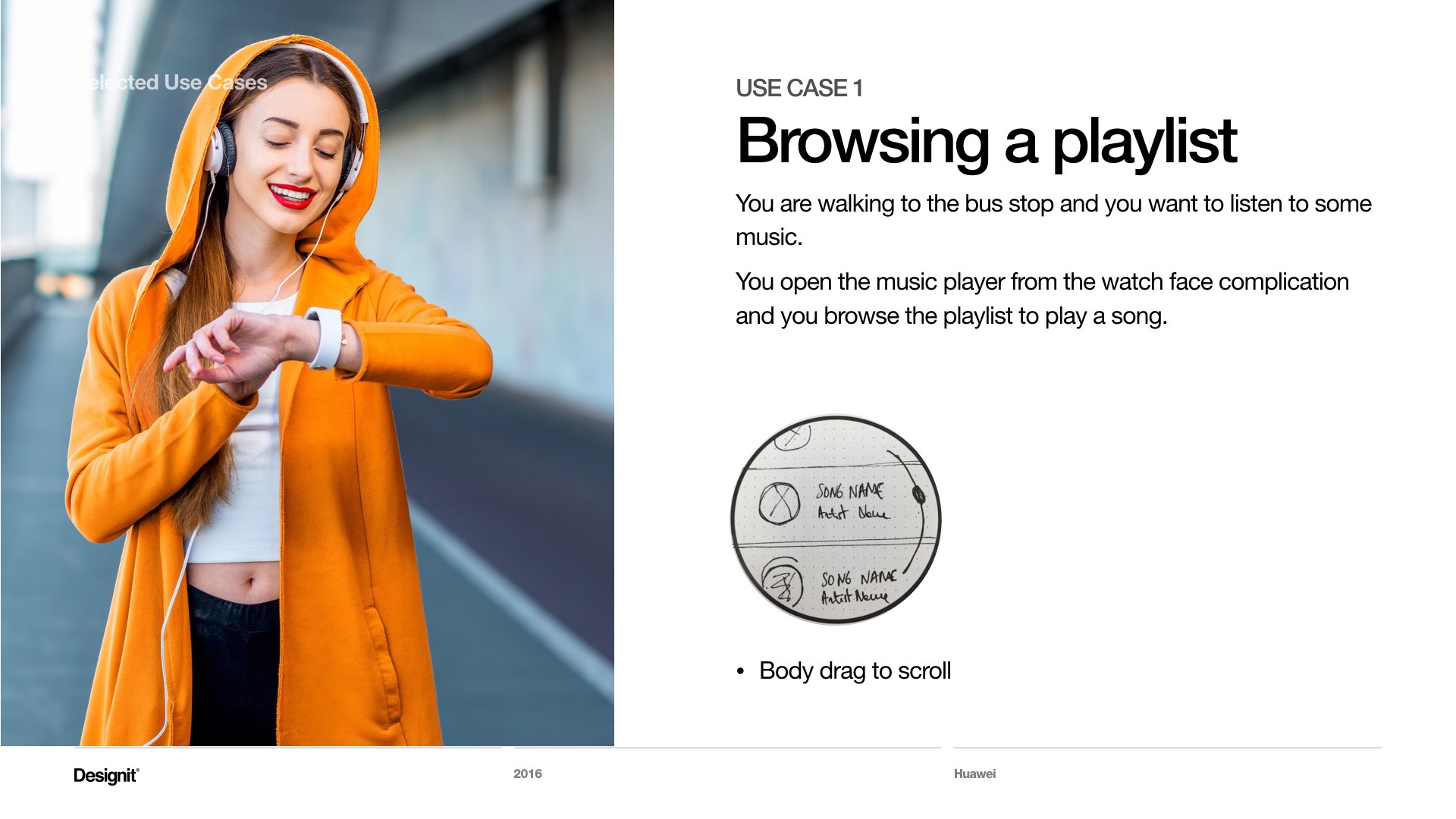

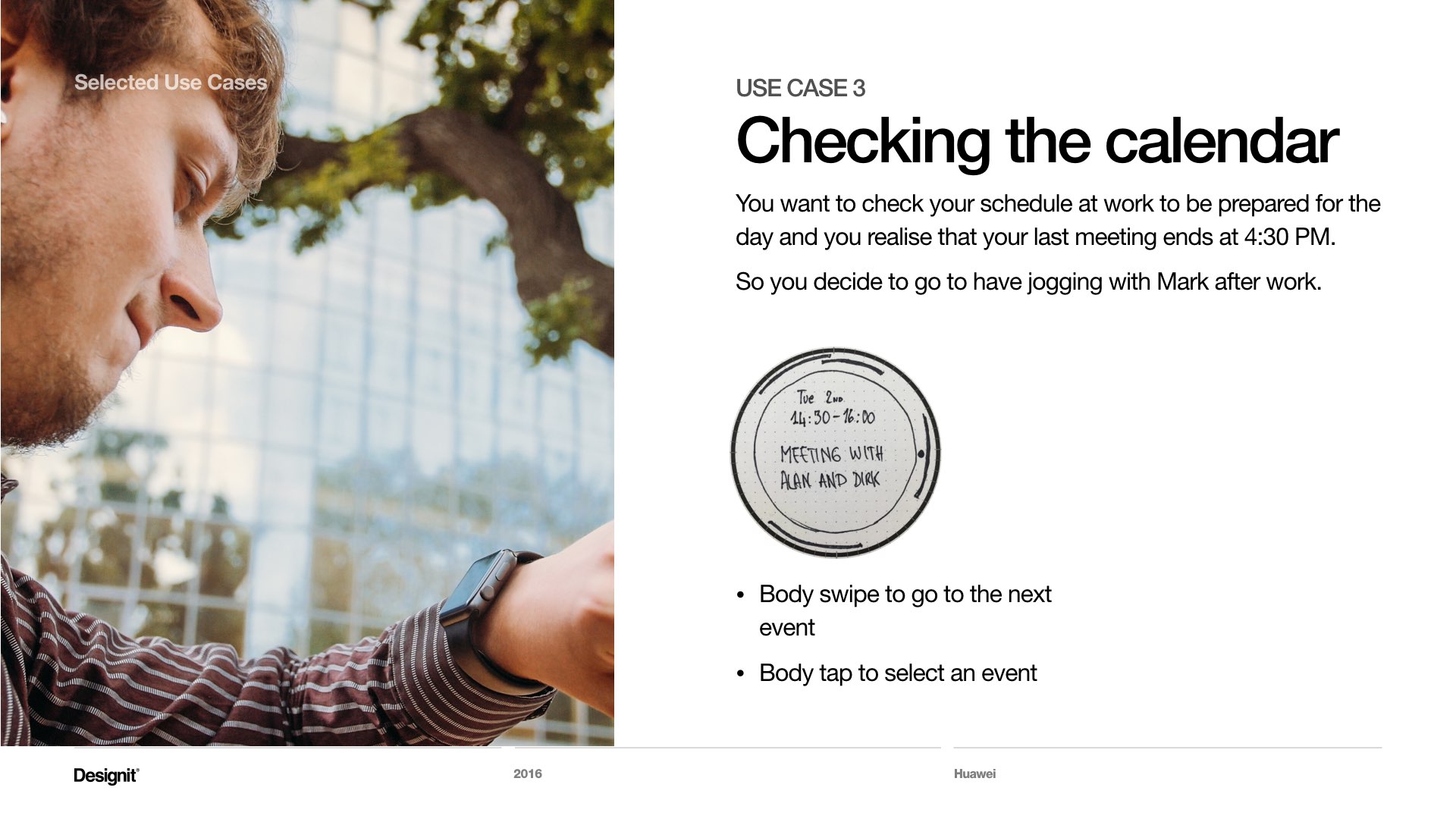

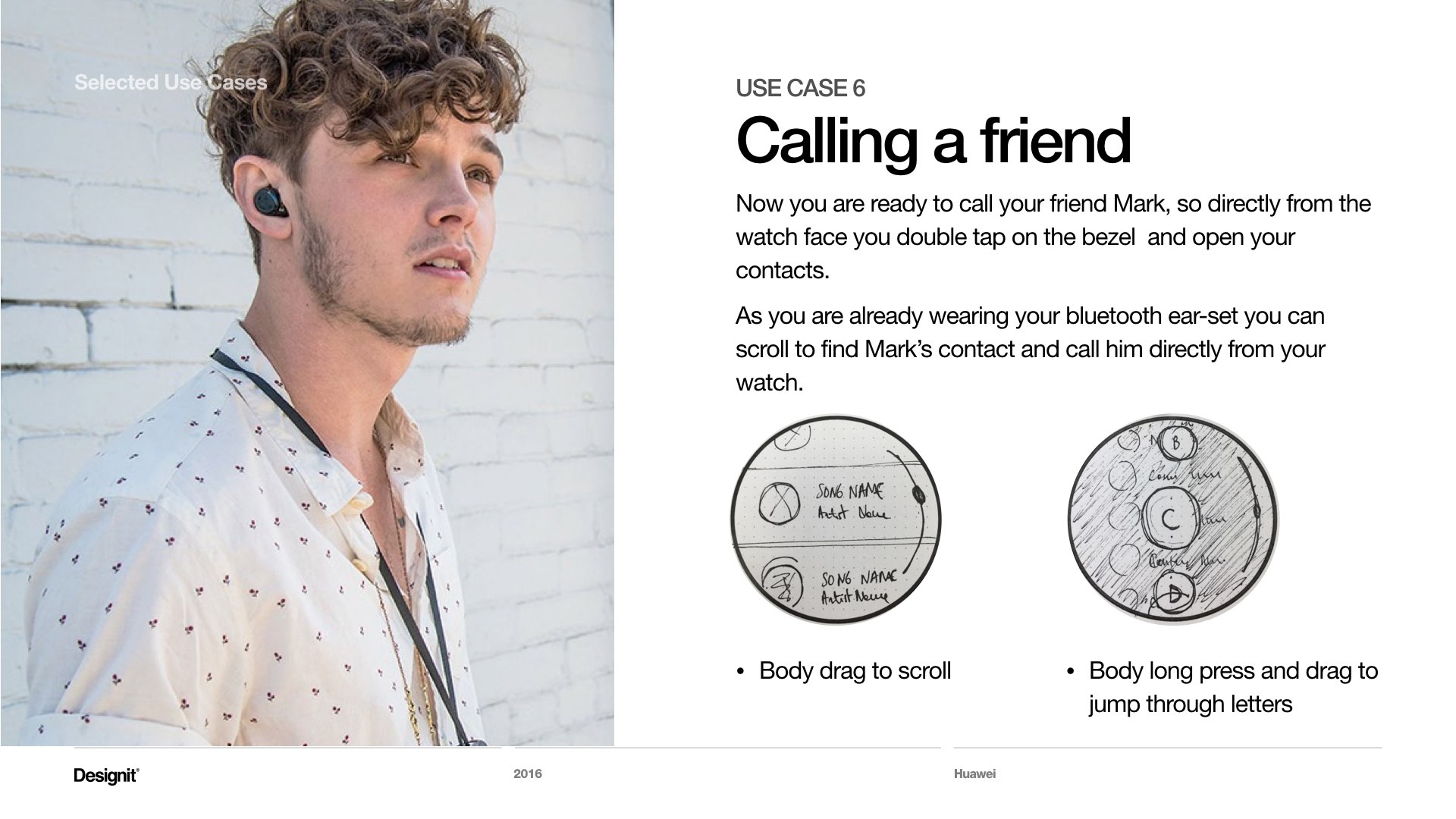

The next phase was to define the use cases that would best leverage this technology. We used the strategy and the design principles to navigate users' needs, gesture ergonomics, contexts of use, UI potential and the technology's unique selling points (USPs). We generated use cases in several Crazy Eights sketching sessions, then prioritised and iterated on the most promising ones.

User interviews

We ran user interviews in both Germany and China with two goals: gaining more insight into users' current behaviour with smartwatches, and testing the acceptance of our scenarios.

We used paper prototypes to find out which gestures felt most natural, test users' reactions to the UI, and validate the relevance of the selected use cases.

The setup consisted of wireframed interfaces printed on round stickers applied to a real watch. We presented two screens at a time and asked users what they would do to get from one screen to the other.

Use cases iteration

We refined use cases and UIs through progressively higher fidelity sketches.

Screen design

After defining the visual style direction, we designed the screens through quick iterations in Sketch, mirroring the output onto the device. We used Principle for Mac to build rough animations and test our ideas before spending too much time refining the screens.

Animations

We prepared a set of refined animations to visualise the user's interactions for both client stakeholders and our developer.

Animations were first prototyped in Principle for Mac, both to give us a better understanding of the interactions and to show them to the developer. The refined versions presented to the client were produced in After Effects.

Technical Demonstrator

The use cases and the narrative were refined to fit into a single Android application. The client could install this application on a working hardware prototype and demonstrate the potential of the touch bezel and the touch-skin sensor.

What happened next?

The project succeeded in showing the potential of these two new input technologies: the client's management was impressed and decided to take development further. The production team took over to assess feasibility and build a sound framework based on our concept.

Huawei has recently patented the technology but hasn't yet revealed how it plans to use it. Looking forward to seeing some of our work in the next version of their smartwatch!